
Making open data reusable: Q&A with bioinformatician Dr. Paul Pavlidis


New technologies across many scientific fields are driving data collection on a massive scale. With that comes the need to organize and analyze these data, and to make them more accessible to other researchers. This is a challenge that bioinformaticians like Dr. Paul Pavlidis are working to address.

Dr. Pavlidis (Michael Smith Laboratories, Department of Psychiatry) leads a bioinformatics research lab with a particular focus on data related to gene expression in the nervous system. The Pavlidis lab also looks at methods for taking large collections of data in this field and making these more reusable for researchers.

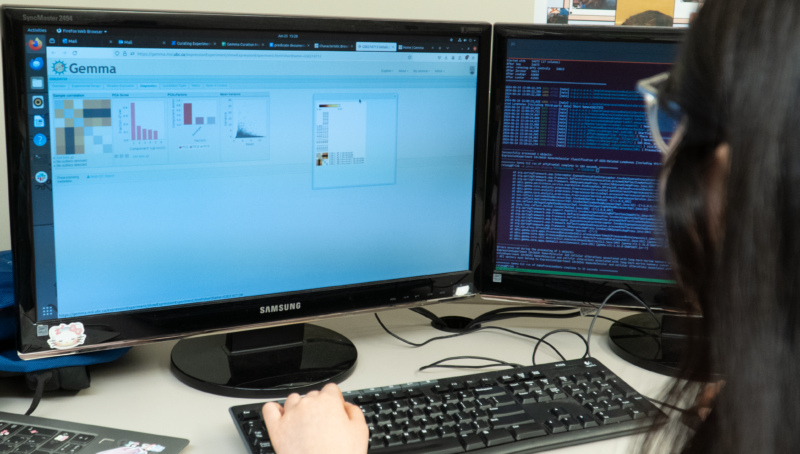

With recent advances in their work, and an upcoming 20th anniversary celebration for their ‘Gemma’ database, we took the opportunity to speak with Dr. Pavlidis about his lab’s work and the significance of open access to scientific data.

What challenges in ‘omics research is your lab currently working to address using bioinformatics?

While we study and use many kinds of ‘omics data, our focus is understanding gene function in the brain. We also collaborate with wet-lab groups studying brain development and disorders of the nervous system.

Through this work, we are interested in quite basic questions about what information is contained in genomics data and how to best explore it. Since a lot of transcriptomic studies assay thousands of genes, resulting in lists of genes to investigate, an ongoing problem is ‘now what?’ This problem of interpreting gene hit lists has long been an interest of mine. For example, with a former postdoc in my lab, Dr. Jesse Gillis, we identified genes that appear frequently on these gene lists, which only become apparent when you start to analyze hundreds of diverse studies. For a biologist faced with their individual gene list, it’s helpful to know whether the gene they’re looking at has been previously ‘discovered’, or if it’s specific to their biological question. In recent work, for instance, we have found genes that come up in 30% of studies across all areas of genomics, which is pretty wild. It’s harder to know what’s surprising and novel without that contextualization.
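To make the idea of contextualizing a hit list concrete, here is a minimal Python sketch. It is an illustration only, not the lab's actual method: the function name `hit_list_frequency`, the gene labels, and the toy studies are all hypothetical. It simply computes, for each gene, the fraction of studies whose hit list includes it.

```python
from collections import Counter


def hit_list_frequency(hit_lists):
    """Return, for each gene, the fraction of studies whose hit list contains it."""
    n_studies = len(hit_lists)
    if n_studies == 0:
        return {}
    counts = Counter()
    for genes in hit_lists:
        # Use a set so a gene is counted at most once per study.
        counts.update(set(genes))
    return {gene: count / n_studies for gene, count in counts.items()}


# Hypothetical toy data: four studies, each reporting a list of "hit" genes.
toy_hit_lists = [
    ["GENE_A", "GENE_B", "GENE_C"],
    ["GENE_A", "GENE_D"],
    ["GENE_A", "GENE_B", "GENE_A"],  # duplicate within one study counts once
    ["GENE_E"],
]

frequency = hit_list_frequency(toy_hit_lists)

# List genes from most to least frequently reported.
for gene, fraction in sorted(frequency.items(), key=lambda item: (-item[1], item[0])):
    print(f"{gene}: on the hit list in {fraction:.0%} of studies")
```

A gene with a high fraction in a table like this would be a candidate ‘usual suspect’: seeing it on a new hit list may say less about the specific biological question than seeing a rarely reported gene.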

This general philosophy of focusing on a single data type in detail by analyzing hundreds (or thousands) of data sets is common in my lab, and this work allows us to apply what we learn to more specific questions. We recently completed a study of how transcriptional regulators are ‘co-expressed’ with the genes they regulate through analysis of hundreds of billions of data points from other studies. Our deep-dive on this subject helped us gather information for forming new predictions of those regulatory relationships, as well as which regulators this approach can or can’t work for. We are now able to shift to even more specific settings, such as within particular cell types for particular regulators, and within the context of specific diseases like Alzheimer’s and schizophrenia.

What is ‘open science’, and why is it important?

Open science can be defined in different ways, and I like to point people to a recent UNESCO recommendation which also sets out a lot of rationales for open science in academia and the private sector, and open data in particular. When publicly funded research data outputs are made freely available for reuse, there’s a lot more value in that, and more of a return on the initial investment. Traditionally, though, open science is not the default, and funding agencies, publishers, and research institutions have had to create policies to move in that direction, with varied success. While there’s still a way to go on this front, I do think we’re seeing a gradual shift as younger scientists seem more likely to treat openness as a default.

At a practical level, the ‘omics revolution of the last 25 years would not have happened as it did without widespread data sharing. A great example, used by many researchers at the MSL, is the bedrock GenBank database of DNA sequences. It was established in the public domain by the US Government starting in the 1980s, and I don’t even want to think about what bioscience would look like without it. It’s like running water; it’s easy to forget how much we depend on it.

What is Gemma, and how does it support and facilitate open data access in ‘omics research?

Gemma is a database and software framework specifically designed for the reuse and sharing of transcriptome data. While it’s one thing to make data open in a basic way, it’s another thing to actually be able to use that data for different applications. Back in the early 2000s, the importance of this was clear to me as I tried to reuse data sets that had been uploaded to various scientists’ websites and public data repositories. Effort is needed to do what we call ‘harmonizing’ the data, so results of experiments coming from different laboratories can be meaningfully combined or compared. That brings me to the second motivation for Gemma, which is to facilitate data analyses that use not just one data set, or two, or ten, but thousands of data sets from thousands of laboratories around the world. By having a lot of data, you can find signals that are weak in a single data set but supported by others, and individual findings can be better contextualized. In short, with Gemma we turn data sets that are merely ‘open’ into ones that are more ‘reusable’.
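The point about weak signals gaining support across data sets can be illustrated with a standard meta-analysis technique, Stouffer's method for combining p-values. This is a sketch under stated assumptions, not a description of how Gemma works: the function name and the example p-values are hypothetical, and the method assumes independent studies reporting one-sided p-values for the same direction of effect.

```python
from math import sqrt
from statistics import NormalDist


def stouffer_combined_p(p_values):
    """Combine independent one-sided p-values with Stouffer's (unweighted) method.

    Each p-value must lie strictly between 0 and 1.
    """
    standard_normal = NormalDist()
    # Convert each p-value to a z-score (small p -> large positive z).
    z_scores = [standard_normal.inv_cdf(1.0 - p) for p in p_values]
    # The sum of k independent standard normals has variance k, so rescale.
    combined_z = sum(z_scores) / sqrt(len(z_scores))
    return 1.0 - standard_normal.cdf(combined_z)


# Hypothetical example: the same gene shows a weak effect (p = 0.10)
# in each of five independent data sets.
weak_p_values = [0.10, 0.10, 0.10, 0.10, 0.10]
combined_p = stouffer_combined_p(weak_p_values)
print(f"Combined one-sided p-value: {combined_p:.4f}")
```

None of the five hypothetical results would be notable alone, but together they give a much smaller combined p-value. A combination like this is only meaningful when the underlying data sets are comparable, which is where harmonization comes in.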

We’ve gone through this harmonization process for well over 19,000 experiments (and many more are ‘in the pipeline’), relying on the effort of human curators (almost all undergraduates from the UBC Science co-op program) and a lot of computation. Now anybody can use Gemma to find and download nice and tidy data sets that are ready for use in different kinds of analysis. It’s something my own students rely on a lot for their own work, with growing numbers of outside users as well.

As Gemma approaches its 20-year anniversary, what functionalities do you hope to enable next through the platform?

We’re always adding data to the database and new features to the software, and love to hear from users what they want to see next. The big thing we’re working on now is being able to take in single-cell RNA-sequencing data. Almost everything about it is different from more common forms of data collected using ‘bulk’ samples, which have been the norm for 25 years. We need to set up new data import, analysis and curation procedures, and we’re in the midst of that work. But we should have our first single-cell data sets well ahead of the 20th anniversary next spring!

What do you see as the next stage in the evolution of bioinformatics research?

It’s pretty common to talk about the AI revolution, and we’re all amazed by how far things have come over the last few years. I think that’s going to continue to have an impact in many ways, and some parts of computational biology already look nothing like they did ten years ago.

I do also like to stress the importance of data. I’ve always said that the best bioinformatics is based on the best data, but that data also has to be the right data for the questions being asked. This means it’s not as simple as the common idea of ‘garbage in, garbage out’. Unfortunately, one of the conclusions we’ve drawn from our research is that we often do not have the right data in genomics research. It’s not that the data is ‘garbage’, but it’s unable to answer the relevant research questions as neatly as we’d like. To this end, my view is that the evolution of bioinformatics research right now is driven by single-cell methods, and related high-throughput screening approaches to genome function enabled by methods like CRISPR. These are changing the kind of information we have access to in important ways, allowing us to have the ‘right’ data by giving us higher resolution and details that are critical to know.

Bioinformatics continually adapts to the appearance of new types of biological data, not the other way around, so the next 20 years are going to be exciting.

Quick links:

- Read the full study from Drs. Pavlidis and Gillis here: https://www.pnas.org/doi/full/10.1073/pnas.1802973116

- Visit the Gemma database at: https://gemma.msl.ubc.ca/home.html